This is the first in a series of blog posts on the topic of evaluation in the context of the ABE program.

Program evaluation is a valuable tool when seeking to strengthen program quality and understand program outcomes. We evaluate programs by systematically collecting and analyzing data to answer questions about implementation and effectiveness.

ABE has engaged in a range of evaluation efforts over the past 15 years. Early in the program, independent evaluations of the ABE Greater Los Angeles Area and ABE San Diego program sites assessed the quality and usefulness of program materials, investigated instructional strategies, and explored student outcomes. In 2016, the Amgen Foundation contracted with WestEd to conduct a rigorous study of student outcomes. This evaluation found that after completing the full sequence of foundational ABE labs:

- Students improved their abilities to interpret experimental results and their knowledge of biotechnology skills.

- Students gained new ideas about what happens in science laboratories and what science is.

- Students increased their interest in science and science education.

As ABE continues to grow and evolve, evaluating the program is no simple task. With the program now operating at 22 sites in 13 countries and including a wide range of activities for both educators and students, how do we conduct an evaluation that is relevant and reliable for so many stakeholders? Since 2017, the ABE Program Office’s internal evaluators, Sophia Mansori and Jennifer Jocz, have been managing and conducting evaluation activities across and among sites, working to understand both the ABE program as a whole and the activities of individual sites.

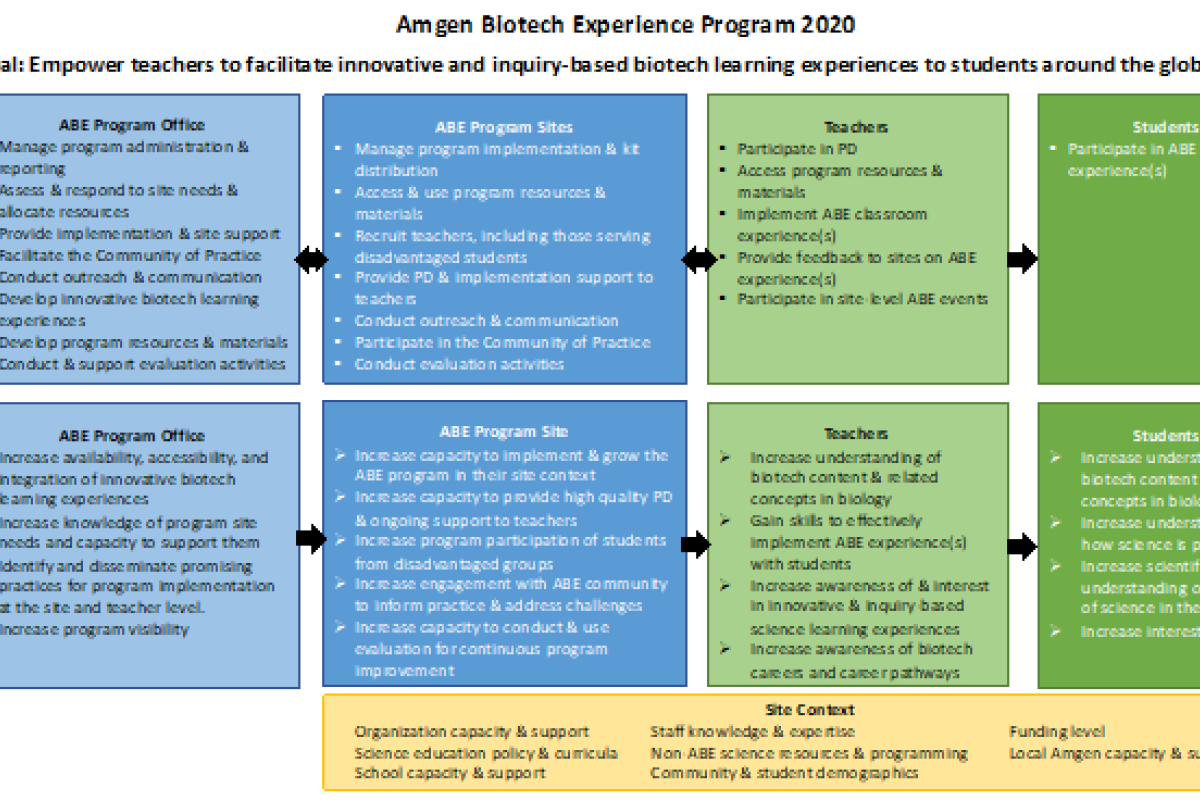

How do we decide what to evaluate and what questions to focus on? A program logic model can help to articulate the different elements of a program and how we expect them to contribute to outcomes for different participants. The current ABE Program logic model illustrates how activities by the Program Office, sites, teachers, and students work together to (ideally) lead to various outcomes. In addition, we developed an ABE Program model that focuses exclusively on implementation and identifies elements of the program that are “core” or common across program sites, and those that may be a result of program scale-up, adaptation, and innovation.

To understand both implementation and outcomes outlined in the logic model, as well as the connections between the two, the evaluators at the ABE Program Office continue to use a number of approaches:

- We use the ABE Program Model as a tool to understand the progress that individual sites and the program as a whole have been making in implementation.

- We are conducting case studies to explore individual sites’ adaptations, successes, and challenges with ABE. We also plan to conduct cross-site studies to learn about specific program elements across sites.

- We are conducting a study of how ABE teachers have begun to use LabXchange as a resource.

- We work with individual sites to support their own evaluation efforts, particularly in learning about teacher professional learning and student outcomes.

- We collect and analyze data about ABE Program Office activities to provide feedback and help to improve how the global program operates and is supported.

Stay tuned for future blog posts where we will share more information about these various evaluation approaches as well as what we have learned through our evaluation efforts.